The rise of generative AI (gen AI) is inspiring organizations to envision a future in which AI is integrated into all aspects of their operations for a more human, personalized and efficient customer experience. However, getting the required compute infrastructure into place, particularly GPUs for large language models (LLMs), is a real challenge. Because demand for GPUs is high, accessing the necessary resources from cloud providers demands careful planning and can involve wait times of up to a month. And once GPUs are obtained, operating the infrastructure requires specialized expertise and adds overhead costs that can constrain the delivery of innovation.

To help organizations make this complex vision a reality, with easy access to GPU infrastructure and no additional operational overhead, Snowflake provides customers with access to industry-leading LLMs via serverless functions in Snowflake Cortex (in private preview). And today we are excited to announce the public preview of Snowpark Container Services, which provides developers with elastic, on-demand compute powered by GPUs for all types of custom LLM app development and advanced use cases.

With the public preview of this new Snowpark runtime that helps developers effortlessly register and deploy container images in their Snowflake account, customers with capacity commitments get fast access to GPU infrastructure. There is no need to self-procure instances or make reservations with their public cloud provider.

Within the scope of gen AI, this new Snowpark runtime empowers developers to efficiently and securely deploy containers for tasks such as the following:

- LLM fine-tuning

- Open-source vector database deployment

- Distributed embedding processing

- Voice-to-text transcription

Why did Snowflake build a container service?

To expand the capabilities of the Snowflake engine beyond SQL-based workloads, Snowflake launched Snowpark, which added support for Python, Java and Scala inside virtual warehouse compute. And to extend flexibility to virtually any language, framework or library as well as hardware of choice including GPUs, Snowflake built Snowpark Container Services.

With Snowflake continuing to bring compute to the data, developers benefit from being able to eliminate additional pipeline development and management, as well as from reduced latencies related to moving large volumes of data to separate platforms. And because Snowpark Container Services is designed with data-intensive processing in mind, developers can effortlessly load and process millions of rows of data.

Such is the case for the engineering team at SailPoint, whose identity security model went from a complex orchestration pipeline to a single execution task. You can read more about their experience with Snowpark Container Services in this two-part blog series (part 1, part 2).

What makes Snowpark Container Services unique?

Integrated governance: Snowpark Container Services runs within the same Snowflake Horizon governance framework as the data. With the same governance applied to both data and compute, developers can more easily ensure data is protected, which streamlines security reviews for new development.

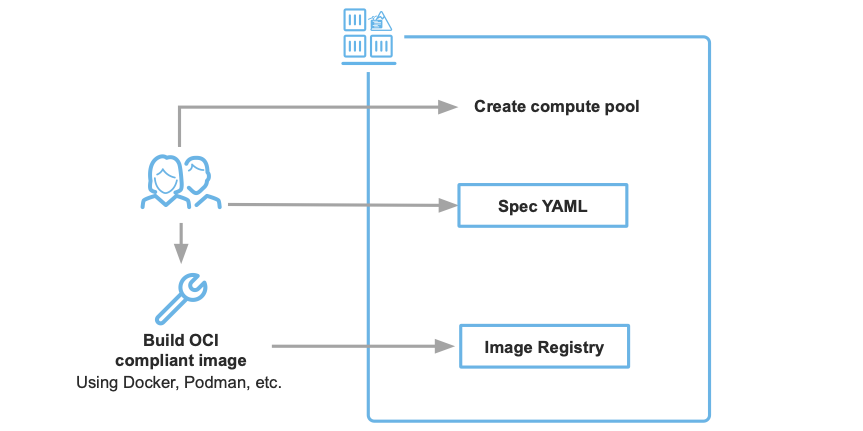

Unified services experience: Snowpark Container Services lowers the operational burden of deploying containers because it comes with an integrated image registry, elastic compute infrastructure and a managed Kubernetes-based cluster, alongside the other services required to run containers in production (see Figure 1).

Streamlined pricing: The integrated services experience also brings more streamlined pricing. Snowpark Container Services charges for compute and storage only, meaning there is no separate billing for the registry, gateway, logs and everything else needed for production deployments.

Best of all, going from a container image in your development environment to a scalable service in the cloud takes just two artifacts: the container image and a spec YAML file.
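To make the second artifact more concrete, here is a minimal Python sketch that builds a service spec as a plain dictionary and renders it as YAML text. It is an illustration under stated assumptions, not the official tooling: the field names (`spec`, `containers`, `endpoints`, `env`, `public`) follow our reading of the documented spec layout and should be checked against the Snowpark Container Services documentation, while the image path, container name, endpoint name and the helpers `build_service_spec`, `scalar` and `to_yaml` are hypothetical.

```python
import json


def build_service_spec(image, port):
    """Build a minimal service spec as a nested dict.

    Field names are an assumption based on the documented spec layout;
    the container and endpoint names are placeholders.
    """
    return {
        "spec": {
            "containers": [
                {"name": "app", "image": image, "env": {"SERVER_PORT": port}},
            ],
            "endpoints": [
                {"name": "app-endpoint", "port": port, "public": True},
            ],
        }
    }


def scalar(value):
    """Format a scalar for YAML: lowercase booleans, bare numbers, quoted strings."""
    if isinstance(value, bool):  # check bool first, since bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, (int, float)):
        return str(value)
    return json.dumps(str(value))  # a double-quoted JSON string is valid YAML


def to_yaml(node, indent=0):
    """Render non-empty nested dicts/lists of scalars as block-style YAML lines."""
    pad = "  " * indent
    lines = []
    if isinstance(node, dict):
        for key, value in node.items():
            if isinstance(value, (dict, list)):
                lines.append(f"{pad}{key}:")
                lines.extend(to_yaml(value, indent + 1))
            else:
                lines.append(f"{pad}{key}: {scalar(value)}")
    elif isinstance(node, list):
        for item in node:
            if isinstance(item, dict):
                child = to_yaml(item, indent + 1)
                # The "- " marker takes the place of the first line's last indent step.
                lines.append(f"{pad}- {child[0].lstrip()}")
                lines.extend(child[1:])
            else:
                lines.append(f"{pad}- {scalar(item)}")
    return lines


if __name__ == "__main__":
    # Hypothetical image path inside an account's image repository.
    spec = build_service_spec("/my_db/my_schema/my_repo/my_image:latest", 8000)
    print("\n".join(to_yaml(spec)))
```

Generating the spec from code rather than editing it by hand keeps the image tag and port in one place, which can help when the same service is deployed to several environments.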

What technical enhancements were made during the private preview?

- Improved security and governance: We enhanced control over security aspects, including egress, ingress and networking. Register here to learn more about this in our upcoming security deep dive tech talk.

- Increased storage options: We added more diverse storage solutions, including local volumes, memory and Snowflake stages. And we are expanding to include block storage (currently in private preview).

- More diverse instance types: We introduced high-memory instances and dynamic GPU allocation for intensive workloads.

Ready to get started?

The team is moving fast to make Snowpark Container Services available across all AWS regions, with support for other clouds to follow.

Here are a few resources to help you get started:

- Deploy your first container in Snowflake (Note: Snowpark Container Services is not available for free trial accounts). Quickstart

- Get notified when new regions become available. GitHub

- Learn more about Snowpark Container Services. Documentation

- See how Snowpark ML plus Snowpark Container Services simplifies LLM deployment. Demo

- Join a technical talk on the future of enterprise AI apps with the VP of Product from Landing AI. Register

We look forward to seeing all the cool things you are building in the Data Cloud with this new runtime that enables near-limitless development flexibility.

The post Unlock the New Wave of Gen AI With Snowpark Container Services GPU-Powered Compute appeared first on Snowflake.