Introduction to RAG

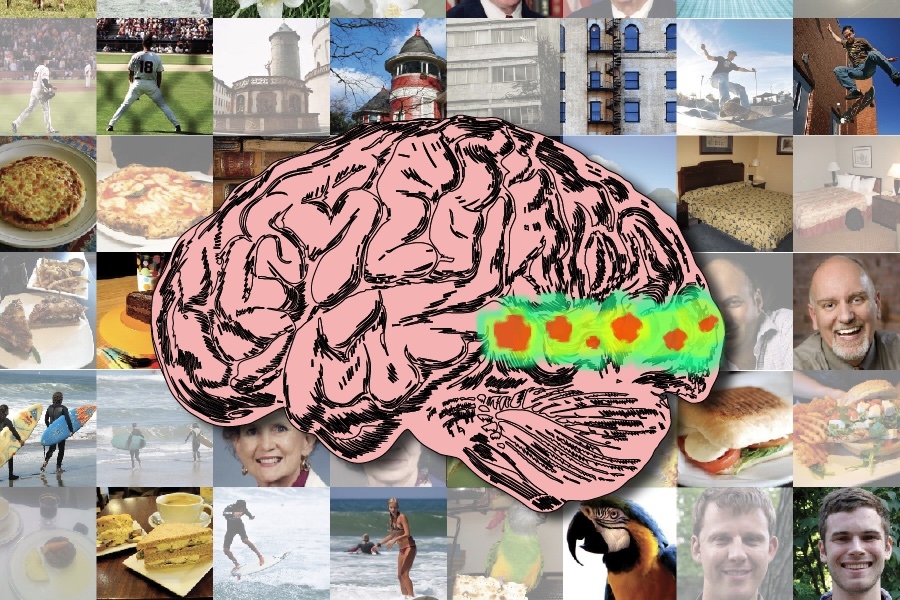

In the constantly evolving world of language models, one steadfast methodology of particular note is Retrieval Augmented Generation (RAG), a procedure incorporating elements of Information Retrieval (IR) within the framework of a text-generation language model in order to generate human-like text with the goal of being more useful and accurate than that which would be generated by the default language model alone. We will introduce the elementary concepts of RAG in this post, with an eye toward building some RAG systems in subsequent posts.

RAG Overview

We create language models using vast, generic datasets that are not tailored to your own personal or customized data. To contend with this reality, RAG can combine your particular data with the existing “knowledge” of a language model. To facilitate this, what must be done, and what RAG does, is to index your data to make it searchable. When a search made up of your data is executed, the relevant and important information is extracted from the indexed data, and can be used within a query against a language model to return a relevant and useful response made by the model. Any AI engineer, data scientist, or developer interested building chatbots, modern information retrieval systems, or other types of personal assistants, an understanding of RAG, and the knowledge of how to leverage your own data, is vitally important.

Simply put, RAG is a novel technique that enriches language models with input retrieval functionality, which enhances language models by incorporating IR mechanisms into the generation process, mechanisms that can personalize (augment) the model’s inherent “knowledge” used for generative purposes.

To summarize, RAG involves the following high level steps:

- Retrieve information from your customized data sources

- Add this data to your prompt as additional context

- Have the LLM generate a response based on the augmented prompt

RAG provides these advantages over the alternative of model fine-tuning:

- No training occurs with RAG, so there is no fine-tuning cost or time

- Customized data is as fresh as you make it, and so the model can effectively remain up to date

- The specific customized data documents can be cited during (or following) the process, and so the system is much more verifiable and trustworthy

A Closer Look

Upon a more detailed examination, we can say that a RAG system will progress through 5 phases of operation.

- Load: Gathering the raw text data — from text files, PDFs, web pages, databases, and more — is the first of many steps, putting the text data into the processing pipeline, making this a necessary step in the process. Without loading of data, RAG simply cannot function.

- Index: The data you now have must be structured and maintained for retrieval, searching, and querying. Language models will use vector embeddings created from the content to provide numerical representations of the data, as well as employing particular metadata to allow for successful search results.

- Store: Following its creation, the index must be saved alongside the metadata, ensuring this step does not need to be repeated regularly, allowing for easier RAG system scaling.

- Query: With this index in place, the content can be traversed using the indexer and language model to process the dataset according to various queries.

- Evaluate: Assessing performance versus other possible generative steps is useful, whether when altering existing processes or when testing the inherent latency and accuracy of systems of this nature.

A Short Example

Consider the following simple RAG implementation. Imagine that this is a system created to field customer enquiries about a fictitious online shop.

- Loading: Content will spring from product documentation, user reviews, and customer input, stored in multiple formats such as message boards, databases, and APIs.

- Indexing: You will produce vector embeddings for product documentation and user reviews, etc., alongside the indexing of metadata assigned to each data point, such as the product category or customer rating.

- Storing: The index thus developed will be saved in a vector store, a specialized database for the storage and optimal retrieval of vectors, which is what embeddings are stored as.

- Querying: When a customer query arrives, a vector store databases lookup will be done based on the question text, and language models then employed to generate responses by using the origins of this precursor data as context.

- Evaluation: System performance will be evaluated by comparing its performance to other options, such as traditional language model retrieval, measuring metrics such as answer correctness, response latency, and overall user satisfaction, to ensure that the RAG system can be tweaked and honed to deliver superior results.

This example walkthrough should give you some sense of the methodology behind RAG and its use in order to convey information retrieval capacity upon a language model.

Conclusion

Introducing retrieval augmented generation, which combines text generation with information retrieval in order to improve accuracy and contextual consistency of language model output, was the subject of this article. The method allows the extraction and augmentation of data stored in indexed sources to be incorporated into the generated output of language models. This RAG system can provide improved value over mere fine-tuning of language model.

The next steps of our RAG journey will consist of learning the tools of the trade in order to implement some RAG systems of our own. We will first focus on utilizing tools from LlamaIndex such as data connectors, engines, and application connectors to ease the integration of RAG and its scaling. But we save this for the next article.

In forthcoming projects we will construct complex RAG systems and take a look at potential uses and improvements to RAG technology. The hope is to reveal many new possibilities in the realm of artificial intelligence, and using these diverse data sources to build more intelligent and contextualized systems.

Matthew Mayo (@mattmayo13) holds a Master’s degree in computer science and a graduate diploma in data mining. As Managing Editor, Matthew aims to make complex data science concepts accessible. His professional interests include natural language processing, machine learning algorithms, and exploring emerging AI. He is driven by a mission to democratize knowledge in the data science community. Matthew has been coding since he was 6 years old.