Trustworthy AI is dependent on a solid foundation of data.

If you bake a cake with missing, expired or otherwise low-quality ingredients, it will result in a subpar dessert. The same holds for developing AI systems to handle large amounts of data.

Data is at the heart of every AI model. Using biased, sensitive or incorrect data in an AI system will produce results reflecting those issues. If your inputs are low quality, your results will be similar. These flaws can easily doom an AI project.

Responsible and trustworthy AI systems do not happen by accident. They are a product of thoughtful design and consideration. Managing data is the second step in a series of blog posts detailing questions to be asked at each of the five pivotal steps of the AI life cycle. These steps – questioning, managing data, developing the models, deploying insights and decisioning – represent the stages where thoughtful consideration paves the way for an AI ecosystem that aligns with ethical and societal expectations.

To ensure we are using the right data, we need to ask questions about the data used in an AI system. Is this the right data to be using? Are we using data that includes protected classes (e.g., race, gender) we are legally prohibited from using? Do we need to perform transformations or imputations on the data? These questions and more must be asked, such as:

Does your data contain any sensitive or privileged information?

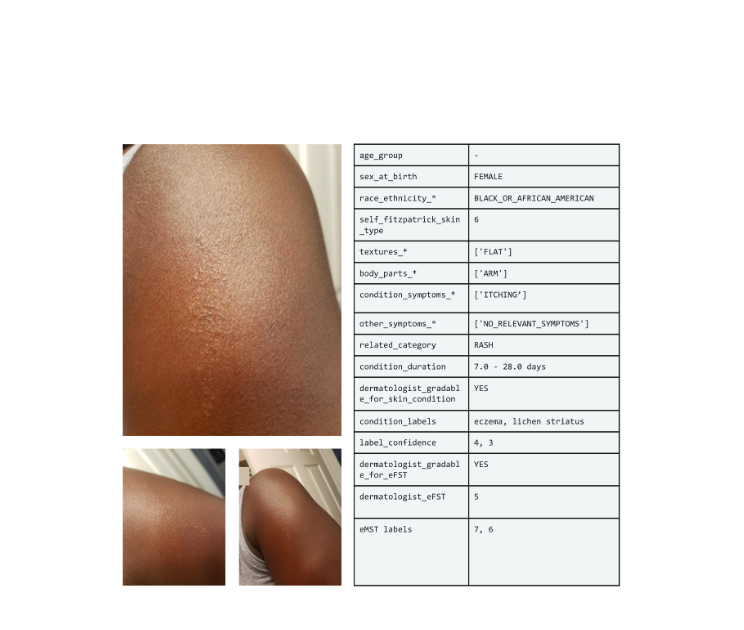

Not all data is created equal and not all data needs to be protected equally. Data classifications range from publicly available (freely available for anyone) to restricted or sensitive data where data stewards must protect it from improper use or dissemination. Some examples of this would be any data that specifies health conditions, personally identifiable information (PII), race, religion, government IDs, etc.

Just because your organization collects some of this information during its normal course of business does not mean you can freely use it in your AI system. Just because we can do something does not mean we should do something.

There may even be legal prohibitions from using some data. Determine if there is a valid reason to include all available sensitive information and consider minimizing the amount needed for your model. Also, the data should be anonymized by stripping out or masking all PII.

Fig 1: Learn how SAS Information Catalog indicates whether the column contains potentially private information that could be linked to an individual.

Have you checked for potential sources of bias?

AI systems can certainly make our lives more productive and convenient. However, this power and speed also mean that a biased model can cause harm at scale, continuing to disadvantage certain groups or individuals.

Over the years, there have been documented cases of biased models related to facial recognition, health care, policing, etc. On a lighter note, there was even a case where an AI-powered camera repeatedly tracked the referee’s bald head instead of the soccer ball.

Checking for bias should always be part of your early development process because many types of bias can creep into your AI system: measurement bias (variables are inaccurately classified or measured), pre-processing bias (when an operation such as missing value treatment, data cleansing, outlier treatment, encoding, scaling or data transformations for unstructured data causes or contributes to systematic disadvantage), exclusion bias (systematically excluding certain groups), and availability bias (overreliance on information easily accessed).

While this is not an exhaustive list, asking these questions can get you on the right path of checking for and eliminating bias.

Is your data hiding bias in proxy variables?

You may not realize that innocent-looking variables lurking in your data are proxies for sensitive variables. Consider an example of a lending organization making credit decisions. These organizations cannot consider certain sensitive variables (e.g., race, gender, religion) when making credit decisions.

However, certain other variables, like zip code, may seem benign but can inadvertently correlate to one or more sensitive variables, acting as a stand-in or proxy variable. Aggregating values, such as aggregating zip codes into larger geographic areas, may be necessary to avoid using proxy variables.

Have you documented how your data moved and transformed from the source?

AI systems require clean, properly formatted data. Getting the right data in the correct format involves data preparation, which may require one or more of the following pre-processing steps:

- Normalization: The process of transforming features in a data set to a common scale.

- Dealing with outliers: Outliers are the data points that fall outside the expected data range. Transforming or removing them is a way to pre-process them.

- Imputation: The specific technique of filling in missing data points within a data set.

- Aggregation: The process of gathering and expressing raw data in a summary form for statistical analysis.

- Data augmentation: A process of artificially increasing the amount of data by generating new data points from existing data.

Don’t stop after you create your data set. Document the process. Documenting how your data transformed from source to AI system input is crucial for the transparency of the process. Understanding and documenting the original data sources and how the data transformed enables others to understand and even recreate the process. You should also document assumptions, rationale, constraints, and any legal or regulatory approval you received for using the data.

Have you checked if your data represents the population the system is being designed for?

Having AI systems trained on representative data is crucial for building fair and effective AI systems. Just like you need a solid foundation to construct your house, representative data forms the bedrock for an AI system.

When we use the correct training data that accurately reflects the characteristics of the population the AI system will be deployed on, it helps reduce bias, improve generalizability and foster fairness. It will be worthwhile to validate whether the data quality issues are not the source of underrepresentation.

Building a solid data foundation to pave the way for trustworthy AI

As we go through the data management phase of the AI life cycle, care must be taken to use the right data at the right time and in the right way. We must be vigilant to provide transparency, root out bias and protect the privacy of individuals, especially those of vulnerable populations.

With the intense scrutiny given to AI systems and their results, a clear plan for managing all aspects of your data is essential.

Want more? Read our comprehensive approach to trustworthy AI governance

Vrushali Sawant contributed to this article