When deploying a model to production, there are two important questions to ask:

1. Should the model return predictions in real time?

2. Could the model be deployed to the cloud?

The first question forces us to choose between real-time and batch inference, and the second between cloud and edge computing.

Real-Time vs. Batch Inference

Real-time inference is a straightforward and intuitive way to work with a model: you give it an input, and it returns a prediction. This approach is used when a prediction is required immediately. For example, a bank might use real-time inference to verify whether a transaction is fraudulent before finalizing it.

Batch inference, on the other hand, is cheaper to run and easier to implement. Inputs that have been previously collected are processed all at once. Batch inference is used for evaluations (when running on static test datasets), ad-hoc campaigns (such as selecting customers for email marketing campaigns), or in situations where immediate predictions aren't necessary. Batch inference can also be a cost or speed optimization of real-time inference: you precompute predictions in advance and return them when requested.
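The precompute-and-return pattern can be sketched in a few lines of Python. This is a minimal illustration, not a production design: `predict` is a hypothetical stand-in for a real model, and the in-memory dictionary stands in for whatever table or key-value store would hold the precomputed predictions.

```python
from typing import Dict, Iterable


def predict(customer_id: int) -> float:
    """Hypothetical model: returns a deterministic score in [0, 1)."""
    return (customer_id * 37 % 100) / 100


def run_batch(customer_ids: Iterable[int]) -> Dict[int, float]:
    """Batch inference: score all previously collected inputs at once."""
    return {cid: predict(cid) for cid in customer_ids}


def serve(customer_id: int, cache: Dict[int, float]) -> float:
    """Return the precomputed score if present, else compute it in real time."""
    cached = cache.get(customer_id)
    if cached is not None:
        return cached
    return predict(customer_id)


# Offline job (for example, nightly): precompute predictions for known customers.
precomputed = run_batch([1, 2, 3])

# Online path: a lookup for known customers, real-time inference otherwise.
known = serve(2, precomputed)     # read from the precomputed table
unknown = serve(10, precomputed)  # not precomputed, falls back to predict()
```

The lookup is cheap and fast, but the precomputed values are only as fresh as the last batch run, which is the trade-off to weigh against pure real-time inference.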

Cloud vs. Edge Computing

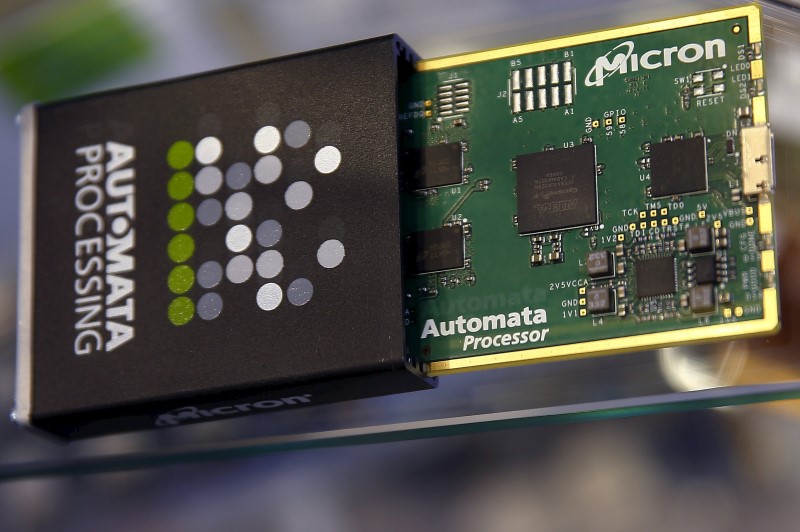

In cloud computing, data is usually transferred over the internet and processed on a centralized server. In edge computing, by contrast, data is processed on the device where it was generated, with each device handling its own data in a decentralized way. Examples of edge devices are phones, laptops, and cars.

Streaming services like Netflix and YouTube typically run their recommender systems in the cloud. Their apps and websites send user data to servers to get recommendations. Cloud computing is relatively easy to set up, and you can scale computing resources almost indefinitely (or at least for as long as it's economically sensible). However, cloud infrastructure depends heavily on a stable internet connection, and sensitive user data should not be transferred over the internet.

Edge computing was developed to overcome these cloud limitations and is able to work where cloud computing cannot. A self-driving engine runs on the car itself, so it can still respond quickly without a stable internet connection. Smartphone authentication systems (like iPhone's FaceID) run on the smartphone because transferring sensitive user data over the internet is not a good idea, and users need to unlock their phones even without an internet connection. However, for edge computing to be viable, the edge device needs to be sufficiently powerful, or alternatively, the model must be lightweight and fast. This gave rise to model compression methods, such as low-rank approximation, knowledge distillation, pruning, and quantization.
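To give a feel for one of these methods, here is a minimal sketch of quantization in plain Python. It assumes the simplest variant, symmetric 8-bit quantization with a single scale for all weights; real toolkits offer more refined schemes, and the function names here are illustrative only.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale; fall back to 1.0 if all weights are zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding loses at most half a quantization step per weight.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight now fits in one byte instead of four (for 32-bit floats), at the cost of a small, bounded rounding error.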

Easy Deployment & Demo

"Production is a spectrum. For some teams, production means generating nice plots from notebook results to show to the business team. For other teams, production means keeping your models up and running for millions of users per day." Chip Huyen, Why data scientists shouldn't need to know Kubernetes

To deploy a model, you need a server (instance) where the model will be running, an API to communicate with the model (send inputs, get predictions), and (optionally) a user interface to accept input from users and show them predictions.
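As a rough illustration of the API piece, the sketch below uses only Python's standard library rather than any particular framework. The `predict` function is a hypothetical stand-in for a real model, and the JSON shape (a `"text"` field in, a label out) and the port are assumptions made for the example.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(text: str) -> dict:
    """Hypothetical stand-in for a model: labels text by its length."""
    label = "long" if len(text) > 20 else "short"
    return {"label": label, "length": len(text)}


def handle_payload(body: bytes) -> bytes:
    """The API contract: JSON with a "text" field in, JSON prediction out."""
    payload = json.loads(body)
    return json.dumps(predict(payload["text"])).encode("utf-8")


class PredictHandler(BaseHTTPRequestHandler):
    """Accepts POST requests carrying a JSON body and replies with a prediction."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        response = handle_payload(self.rfile.read(length))
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.end_headers()
        self.wfile.write(response)


def serve(port: int = 8000) -> None:
    """Start the server on localhost; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Calling `serve()` starts the server, after which a client can POST `{"text": "hello"}` to it and receive the prediction as JSON. The sketch skips input validation and error handling, which a real service would need.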

Google Colab is Jupyter Notebook on steroids. It is a great tool to create demos that you can share. It does not require any specific installation from users, it offers free servers with GPU to run the code, and you can easily customize it to accept any inputs from users (text files, images, videos).

FastAPI is a framework for building APIs in Python. You may have heard about Flask. FastAPI is similar, but simpler to code, more specialized towards APIs, and faster.

Streamlit is an easy tool to create web applications. It is easy, I really mean it. And applications turn out to be nice and interactive, with images, plots, input windows, buttons, sliders, and more. Streamlit also offers Community Cloud, where you can publish apps for free.

Cloud Platforms. Google and Amazon do a great job making the deployment process painless and accessible. They offer paid end-to-end solutions to train and deploy models (storage, compute instances, APIs, monitoring tools, workflows, and more).

Like all software systems in production, ML systems must be monitored. Monitoring helps detect and localize bugs quickly and prevent catastrophic system failures.

Technically, monitoring means collecting logs, calculating metrics from them, displaying these metrics on dashboards like Grafana, and setting up alerts for when metrics fall outside expected ranges.

What metrics should be monitored? Since an ML system is a subclass of a software system, start with operational metrics. Examples include the CPU/GPU utilization, memory, and disk space of the machine; the number of requests sent to the application, response latency, and error rate; and network connectivity.
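The log-to-metric-to-alert flow can be sketched as follows. This is a simplified illustration: the log records, metric names, and thresholds are all made up for the example, and a real setup would ship these metrics to a dashboard and alerting tool rather than compute them inline.

```python
import math
from typing import Dict, List

# Hypothetical request logs: one record per request.
logs = [
    {"status": 200, "latency_ms": 40},
    {"status": 200, "latency_ms": 55},
    {"status": 500, "latency_ms": 900},
    {"status": 200, "latency_ms": 60},
]


def compute_metrics(records: List[dict]) -> Dict[str, float]:
    """Aggregate raw logs into operational metrics (assumes a non-empty list)."""
    errors = sum(1 for r in records if r["status"] >= 500)
    latencies = sorted(r["latency_ms"] for r in records)
    # Nearest-rank 95th percentile of response latency.
    rank = math.ceil(0.95 * len(latencies))
    return {
        "request_count": len(records),
        "error_rate": errors / len(records),
        "p95_latency_ms": latencies[rank - 1],
    }


# Assumed upper bounds; a metric above its bound triggers an alert.
THRESHOLDS = {"error_rate": 0.05, "p95_latency_ms": 500}


def check_alerts(metrics: Dict[str, float], thresholds: Dict[str, float]) -> List[str]:
    """Return the names of metrics that exceed their expected range."""
    return [name for name, limit in thresholds.items() if metrics[name] > limit]


metrics = compute_metrics(logs)
alerts = check_alerts(metrics, THRESHOLDS)
```

With the sample logs above, both the error rate and the tail latency exceed their assumed thresholds, so both appear in `alerts`.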

While operational metrics are about machine, network, and application health, ML-related metrics check model accuracy and input consistency.

Accuracy is the most important thing we care about, and it can degrade silently. The model might still return predictions, but those predictions could be entirely off-base, and you won't realize it until the model is evaluated.

Why could model accuracy drop at all? The most widespread reason is that production data has drifted from training/test data. To automatically detect data drift (it is also called…
